by WiseOcean | Jun 18, 2023 | AI Chip, Major Trends

Key takeaways

VC investors have already shifted their focus to categories in which startups can carve out AI chip market share. In 2021 and 2022, AI/ML VC deal value in inferencing became significantly larger than deal value in training-only chips, breaking a historical trend.

Both the PC and automotive AI chip markets are growing faster than the data center AI chip market, at over 30% each. At this pace, they will surpass the data center market in size by 2025.

Edge inferencing is likely to be dominated by existing vendors, all of which are investing heavily in supporting transformers and LLMs. So, what opportunities exist for new entrants? Three stand out: forming automotive partnerships; supplying IP or chiplets to one of the SoC vendors; and creating customized chips for intelligent edge devices that can afford the cost.

PC and automotive AI chip markets

AI computing remains a major growth driver for the semiconductor industry, and at $43.6 billion in 2022, the market remains large enough to support large private companies. The AI semiconductor market is also divided into companies in China and those outside of China because of current political circumstances.

Nvidia is clearly the leader in the market for training chips, but training makes up only about 10% to 20% of the demand for AI chips. Inference chips interpret trained models and respond to user queries. This segment is much bigger and quite fragmented; not even Nvidia has a lock on it. Techspot estimates that the market for AI silicon will comprise about 15% for training, 45% for data center inference, and 40% for edge inference. The serviceable market for foundation model training will likely remain too small to support large companies, so startups there may see only relatively low acquisition offers. Where is the opportunity?

Data center, automotive, and PC: these three sectors account for 90% of the AI chip market when smartphones and smartwatches are excluded (to prevent data bias from Apple and Samsung). In the data center, however, six vendors hold 99% of the market share, so that market is saturated.

Both the PC and automotive AI semiconductor markets are growing faster than the data center AI semiconductor market, at over 30% each. At this pace, they will surpass the data center market in size by 2025.

Inferencing at the Edge

In the past two years, we saw a 69.0% year-over-year decline in VC funding for AI chip startups outside of China. VC investors have already shifted their focus to categories in which startups can carve out market share. In 2021 and 2022, AI/ML VC deal value in inferencing became significantly larger than deal value in training-only chips, breaking a historical trend. Edge computing demands are also driving more commercial partnerships for inference-focused chips than for cloud training chips.

Custom chips and startups can outperform the chip giant on specific inference tasks that will become crucial as large language models are rolled out from cloud data centers to customer environments, that is, inferencing at the edge. The term ‘edge’ refers to any device in the hands of an end user (phones, PCs, cameras, robots, industrial systems, and cars). These chips are likely to be bundled into a System on a Chip (SoC) that executes all the functions of those devices.

What opportunities exist for new entrants?

Edge inferencing is likely to be dominated by existing vendors of traditional silicon, all of which are investing heavily in supporting transformers and LLMs. So, what opportunities exist for new entrants?

- Supply IP or chiplets to one of the SoC vendors. This approach has the advantage of relatively low capital requirements; let your customer handle payments to TSMC. There is a plethora of customers aiming to build SoCs.

- Find new edge devices that could benefit from a tailored solution. Shift focus from phones and laptops to cameras, robots, drones, industrial systems, etc. Some of these devices are extremely cheap, however, and thus cannot accommodate chips with high ASPs. A few years ago, there were many pitches from companies looking to do low-power AI on cameras and drones; very few have survived. But edge computing has become more prevalent with the trend toward “smart everything”, and computing platforms now extend into wearables such as Mixed Reality headsets. Technology advancements keep opening new possibilities.

- Form automotive partnerships. This market is still highly fragmented, but the opportunity is substantial. In Q2 2022, edge AI chip startup Hailo announced a partnership with leading automotive chipmaker Renesas for self-driving applications.

As the world electrifies in order to decarbonize, and automates the optimization of energy usage and much else, edge AI chips with the right upstream and downstream partnerships are promising opportunities for investors and startups. The financial downturn may encourage M&A for startups that align with the product needs of incumbents. Historical examples include Annapurna Labs’ $370.0 million exit to Amazon and Habana Labs’ $1.7 billion exit to Intel.

References:

Inferring the future of AI chips, Pitchbook

Semiconductor innovation opportunities in Edge AI (original title: 邊緣 AI(Edge AI)的半導體創新機會)

by WiseOcean | Sep 29, 2020 | AI Chip

Here is an introduction to the AI chip industry landscape and highlights with selected infographics and resources.

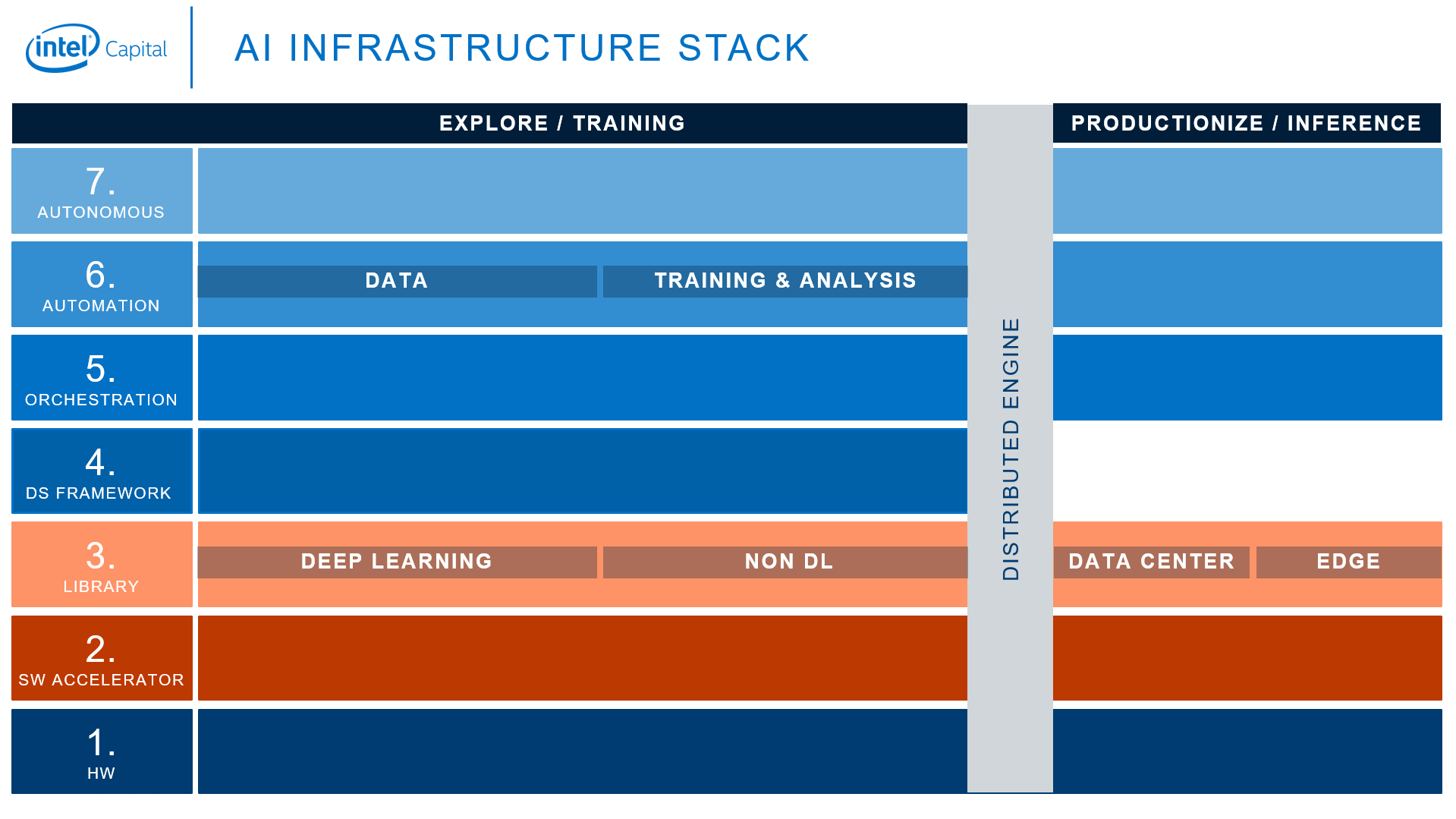

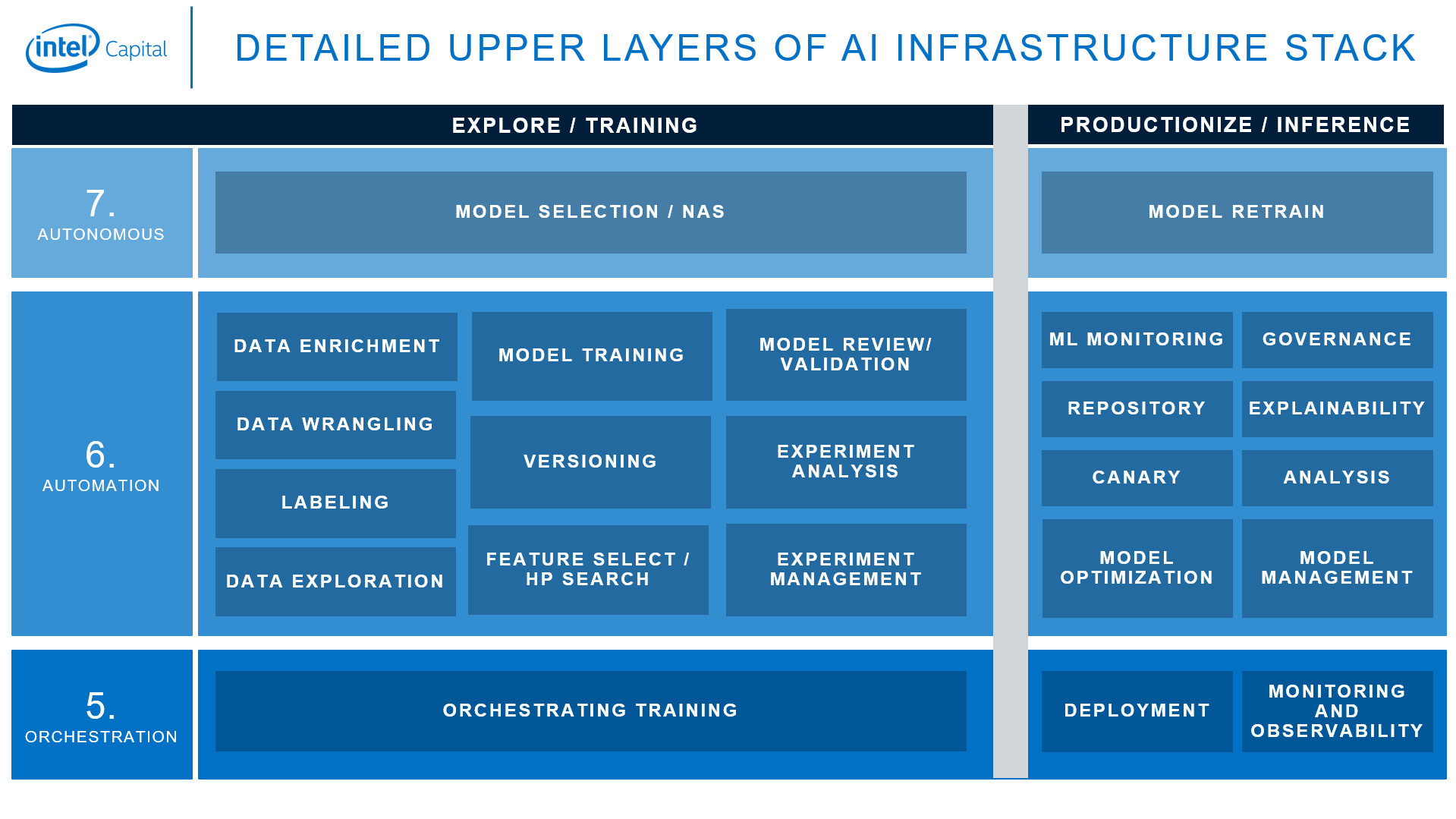

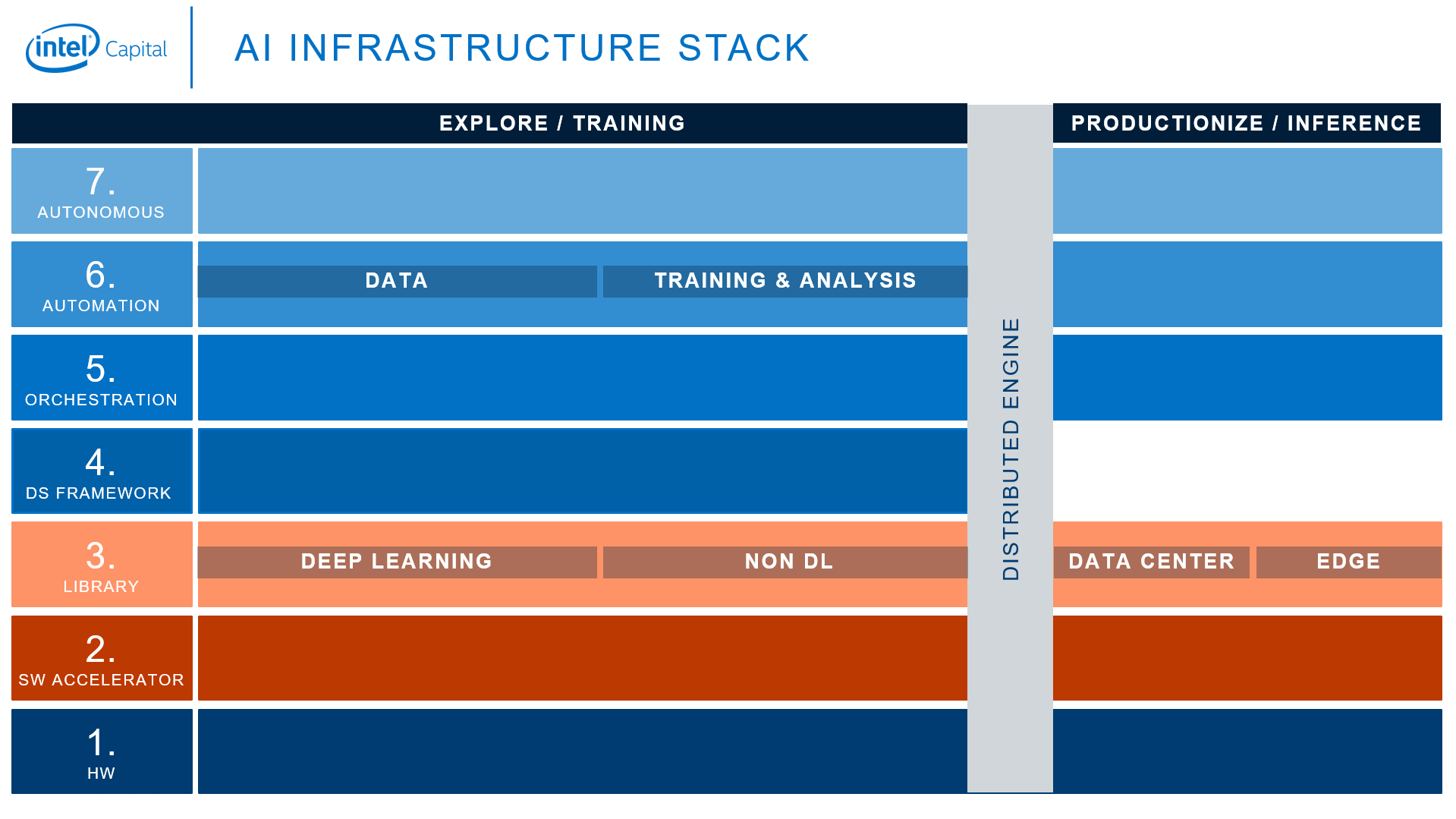

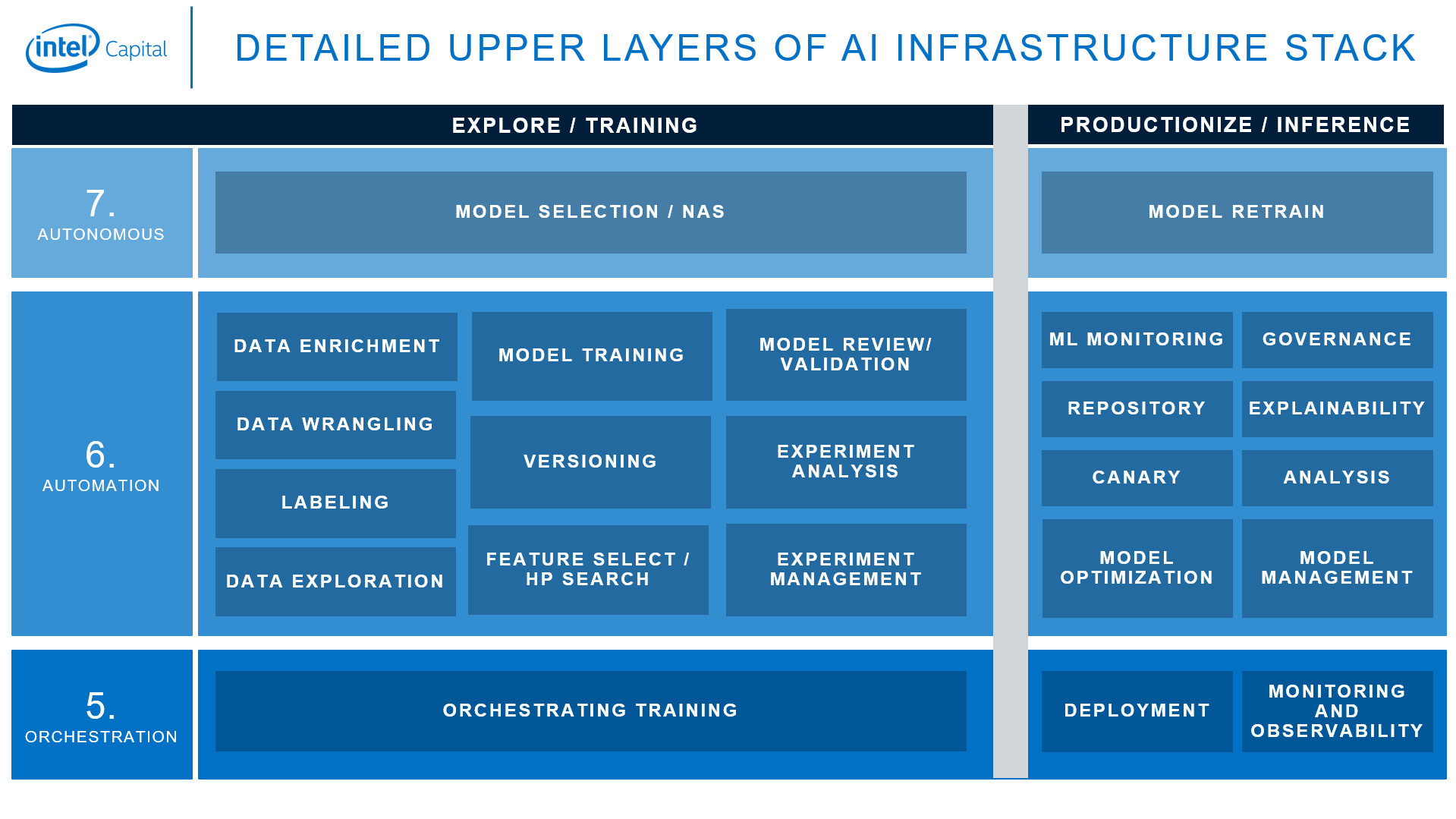

Full AI infrastructure stack from Intel Capital

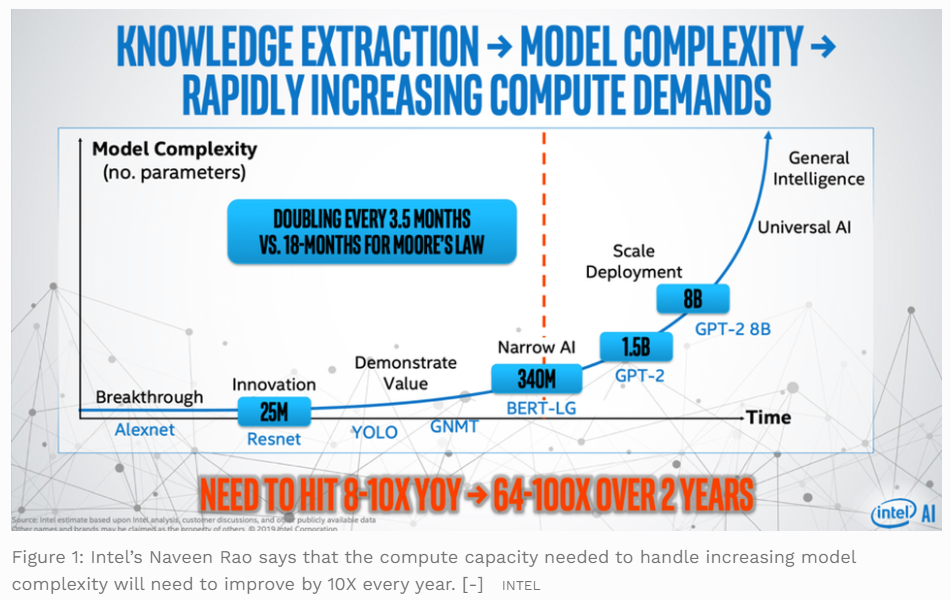

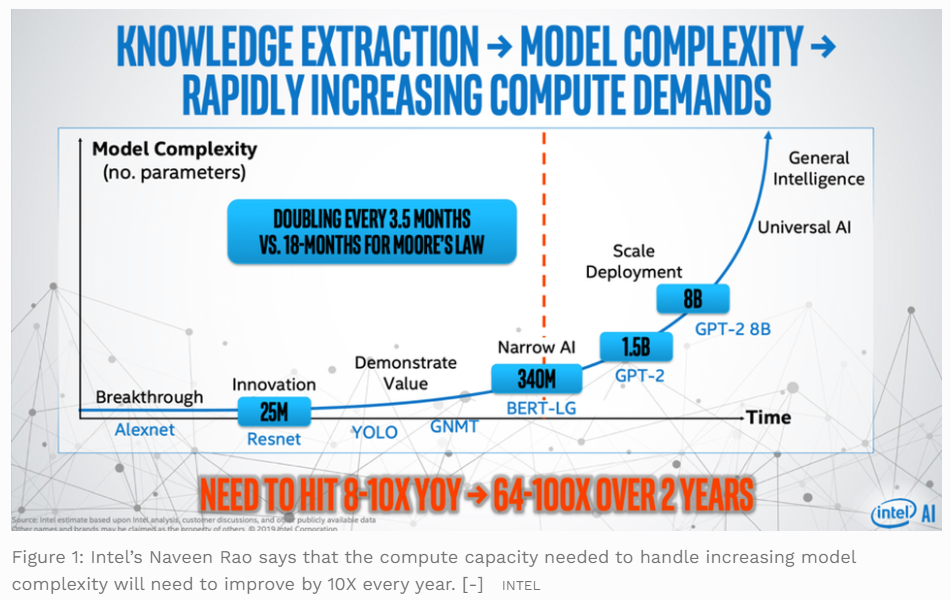

Intel’s Naveen Rao says that the compute capacity needed to handle ever-larger models will have to improve by 10x every year, and that achieving this will take 2x advances in architecture, silicon, interconnect, software, and packaging.
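To make the arithmetic behind that statement concrete, here is a minimal sketch. It assumes (the quote does not say so explicitly) that each of the five areas contributes an independent gain and that the gains multiply. The function names are hypothetical and exist only for this illustration.

```python
# Assumption: the five areas named in the text each contribute an independent
# speedup, and the overall gain is the product of the individual gains.
AREAS = ["architecture", "silicon", "interconnect", "software", "packaging"]


def combined_gain(per_area_gain: float, n_areas: int = len(AREAS)) -> float:
    """Overall speedup if every area improves by the same factor."""
    return per_area_gain ** n_areas


def required_per_area_gain(target: float, n_areas: int = len(AREAS)) -> float:
    """Per-area factor needed to reach a target overall speedup."""
    return target ** (1.0 / n_areas)


if __name__ == "__main__":
    print(combined_gain(2.0))                      # 32.0
    print(round(required_per_area_gain(10.0), 3))  # 1.585
```

Under this multiplicative assumption, a 2x gain in all five areas would give 32x, and roughly 1.6x per area would already reach 10x. The point is that no single layer of the stack can deliver the target on its own.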

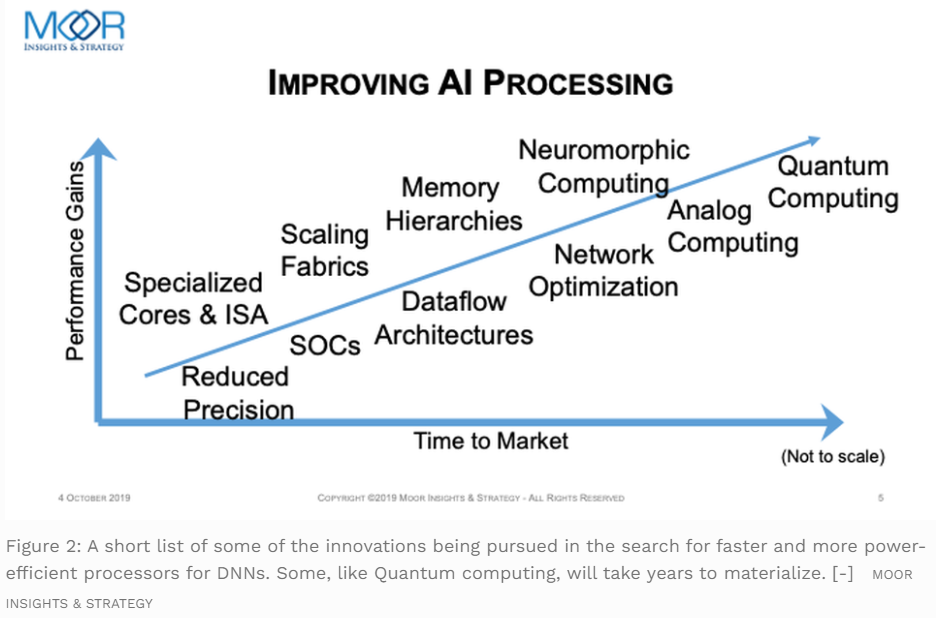

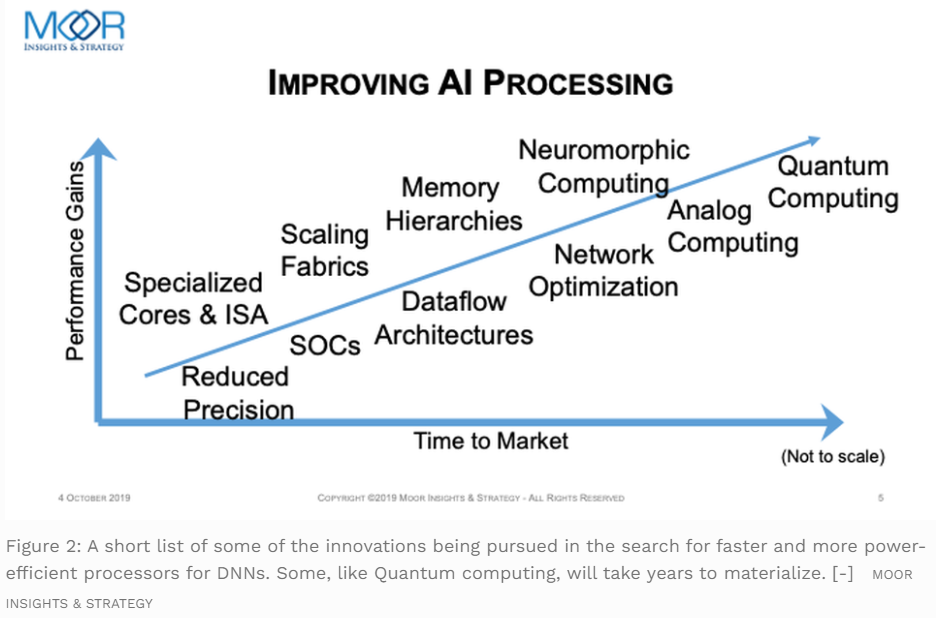

There are many innovations to improve performance. Moor Insights & Strategy created a chart to highlight them (beyond the use of lower precision, tensor cores, and arrays of MACs). But AI hardware is harder than it looks.

IBM also has a technology roadmap for the future of AI chips.

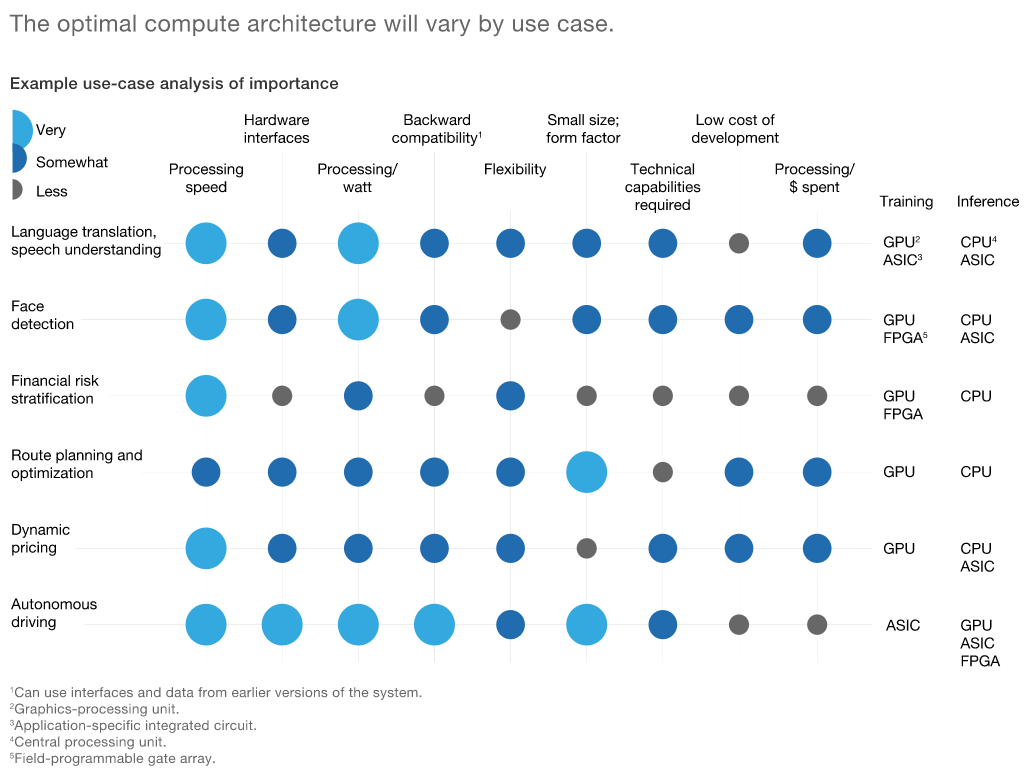

But speed/performance isn’t the only key metric. It’s always about making trade-offs between power, performance, and area (PPA) and optimizing them for specific applications.

The trade-off between performance (in GOP/sec) and power (image from IMEC and Beil8).

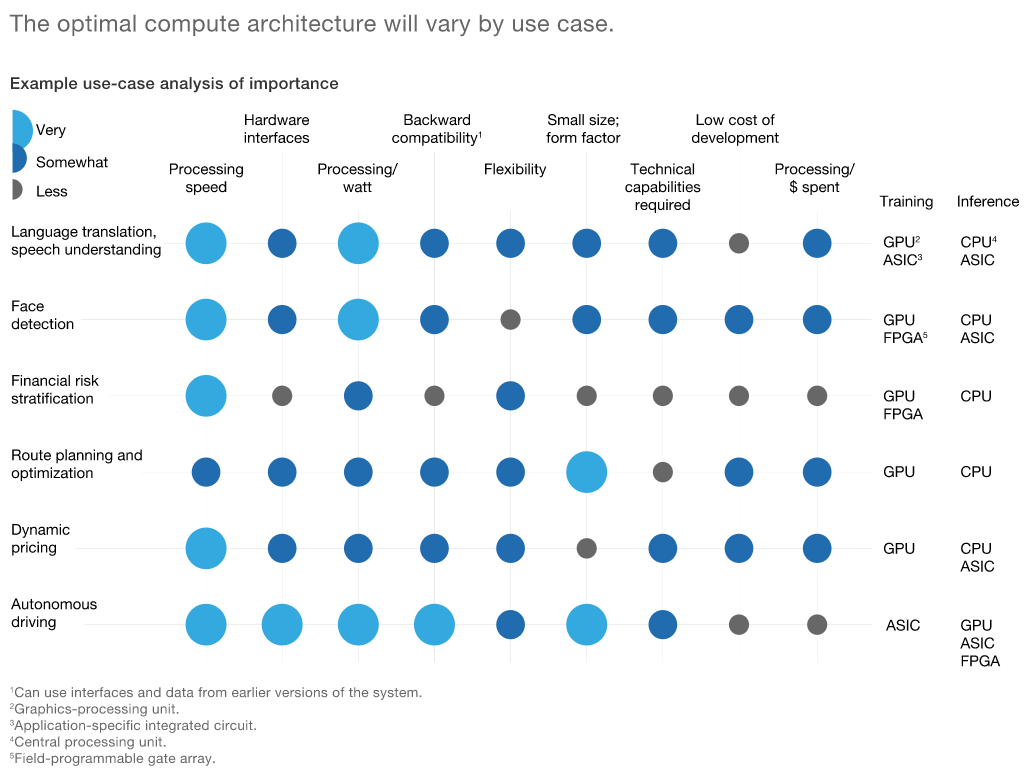

Feature requirements differ across use cases, and so do the choices of AI chip architecture. McKinsey gives some examples here.

McKinsey also concluded that AI ASIC chips will see the biggest growth of all.

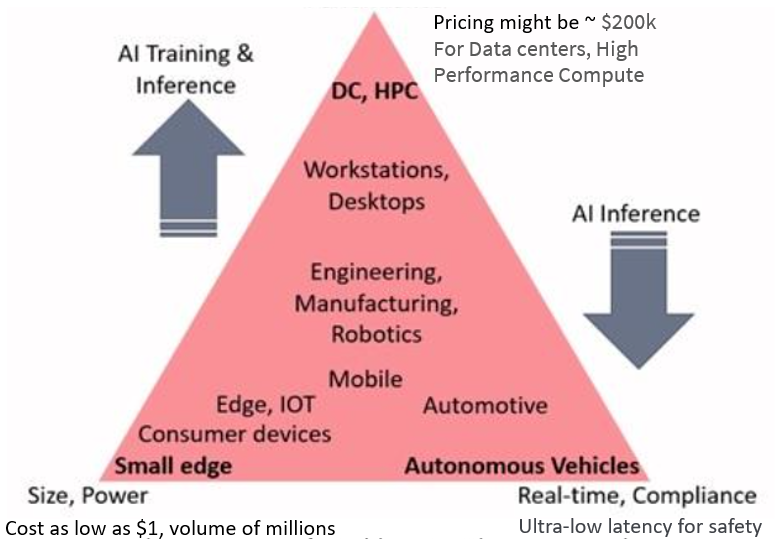

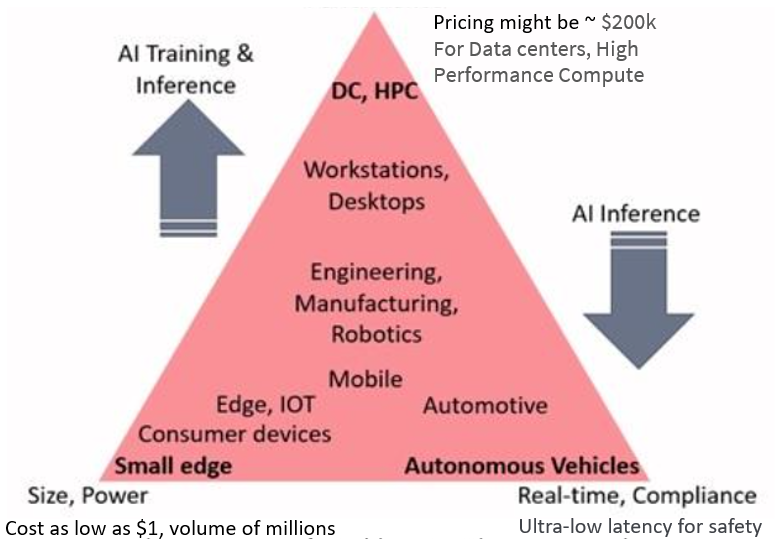

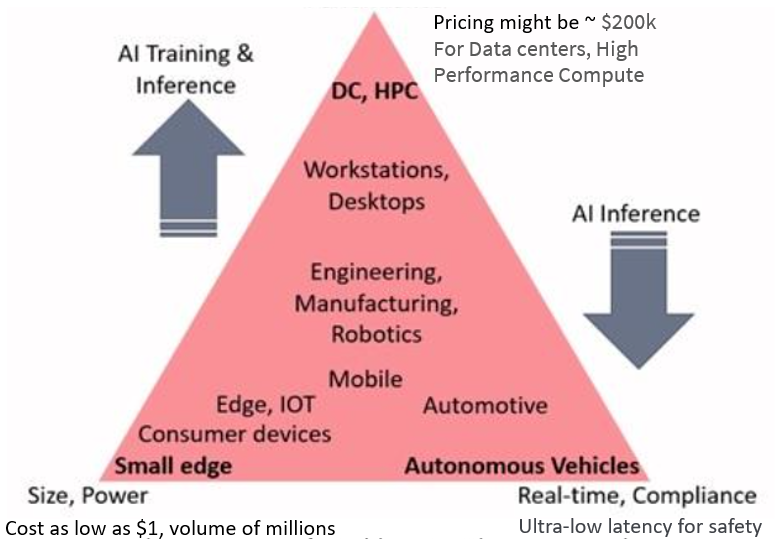

The AI chip landscape includes 80 startups globally that have attracted more than $10 billion in funding, plus 34 established players. Most startups focus on inferencing to avoid competing with Nvidia. Kisaco Research created a conceptual triangle to represent the three major segments of AI accelerators.

The need for AI hardware accelerators has grown with the adoption of DL applications in real-time systems where there is a need to accelerate DL computation to achieve low latency (less than 20ms) and ultra-low latency (1-10ms). DL applications in the small edge especially must meet a number of constraints: low latency and low power consumption, within the cost constraint of the small device. From a commercial viewpoint, the small edge is about selling millions of products and the cost of the AI chip component may be as low as $1, whereas a high-end GPU AI accelerator ‘box’ for the data center may have a price tag of $200k. (from Michael Azoff, Kisaco Research)

IMEC identified the rise of the edge AI chip industry as one of five trends that will shape the future of the semiconductor technology landscape.

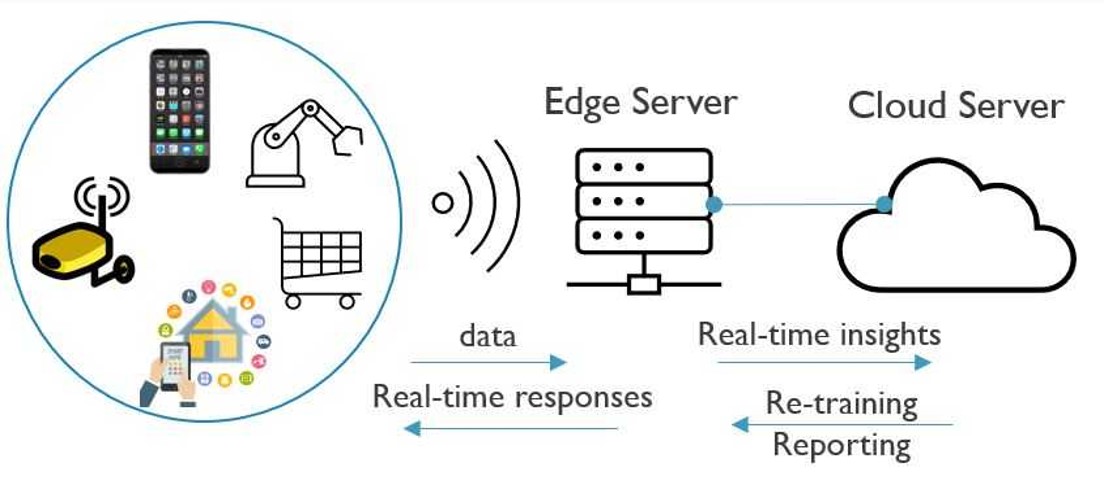

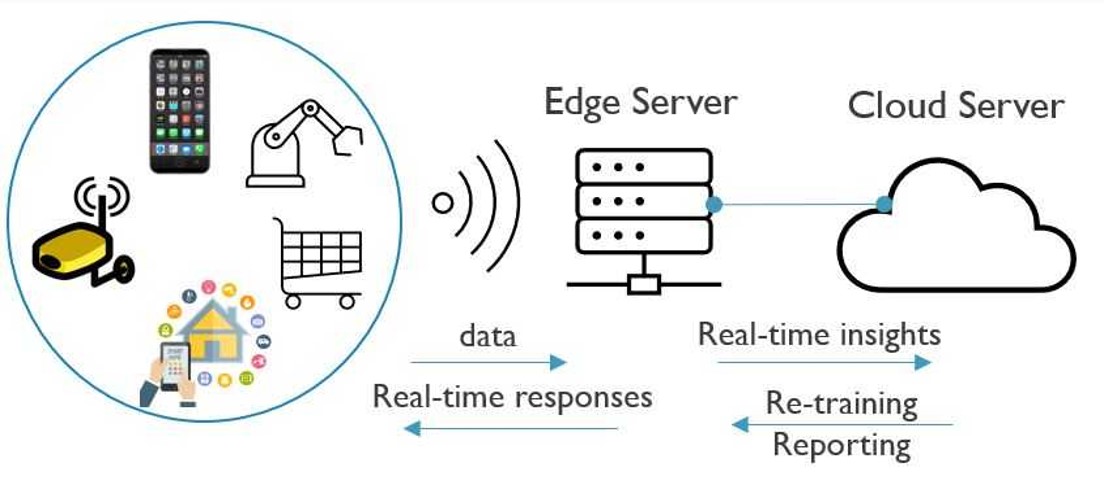

With an expected growth of above 100% in the next five years, edge AI is one of the biggest trends in the chip industry. As opposed to cloud-based AI, inference functions are embedded locally on the Internet of Things (IoT) endpoints that reside at the edge of the network, such as cell phones and smart speakers. The IoT devices communicate wirelessly with an edge server that is located relatively close. This server decides what data will be sent to the cloud server (typically, data needed for less time-sensitive tasks, such as re-training) and what data gets processed on the edge server.
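The split described above, where an edge server decides what stays local and what goes to the cloud, can be sketched as a simple routing rule. This is an illustrative sketch only: the `Task` type, the `route` function, and the 100 ms cloud round-trip figure are all hypothetical assumptions, not values from IMEC or any real deployment.

```python
from dataclasses import dataclass

# Hypothetical figure for illustration; real round-trip times vary widely.
ASSUMED_CLOUD_ROUND_TRIP_MS = 100.0


@dataclass
class Task:
    name: str
    deadline_ms: float  # how quickly a result is needed


def route(task: Task, cloud_round_trip_ms: float = ASSUMED_CLOUD_ROUND_TRIP_MS) -> str:
    """Keep a task on the edge server if the cloud cannot answer in time."""
    return "edge" if task.deadline_ms < cloud_round_trip_ms else "cloud"


if __name__ == "__main__":
    tasks = [
        Task("obstacle-detection", deadline_ms=10.0),       # time-sensitive
        Task("retraining-data-upload", deadline_ms=60_000.0),  # can wait
    ]
    for task in tasks:
        print(task.name, "->", route(task))
```

A real edge server would weigh more than latency (privacy, bandwidth, and server load, for instance), but the deadline check captures the basic idea that only less time-sensitive work is forwarded.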

Compared to cloud-based AI, in which data needs to move back and forth from the endpoints to the cloud server, edge AI addresses privacy concerns more easily. It also offers advantages of response speeds and reduced cloud server workloads. Just imagine an autonomous car that needs to make decisions based on AI. As decisions need to be made very quickly, the system cannot wait for data to travel to the server and back. Due to the power constraints typically imposed by battery-powered IoT devices, the inference engines in these IoT devices also need to be very energy efficient.

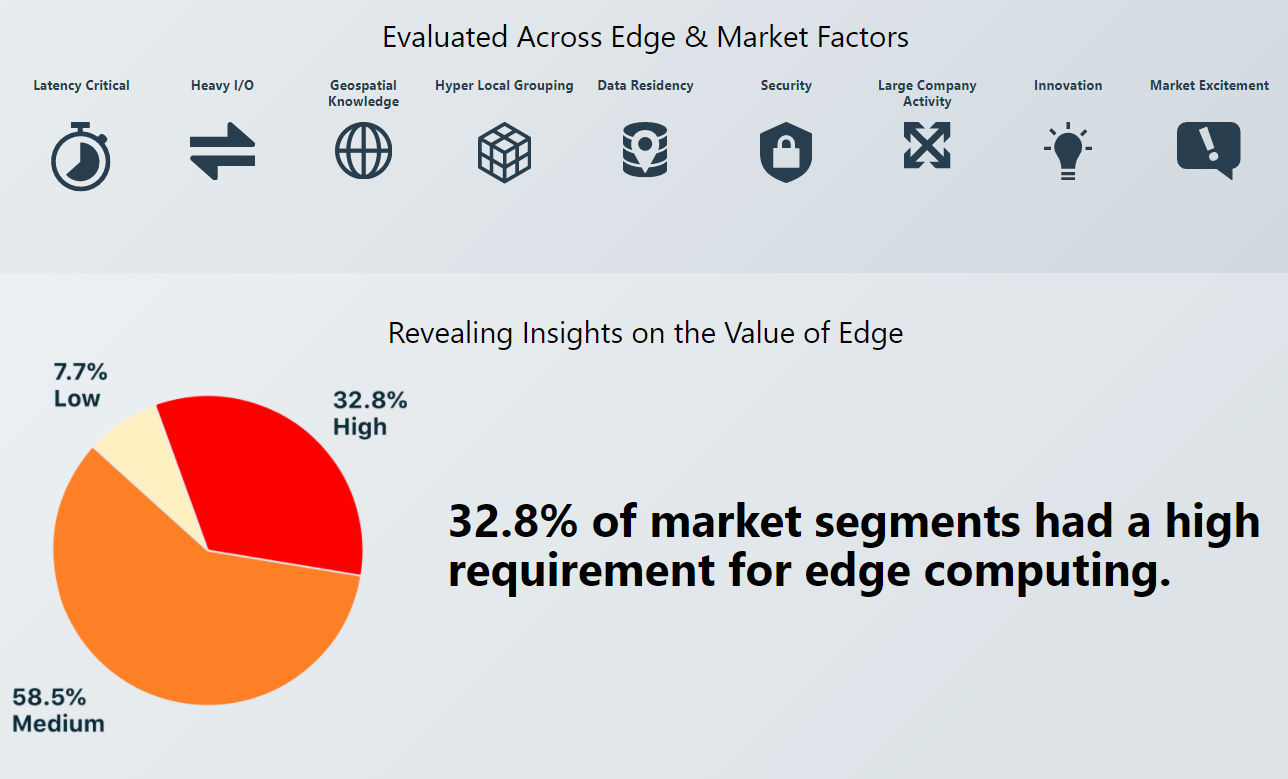

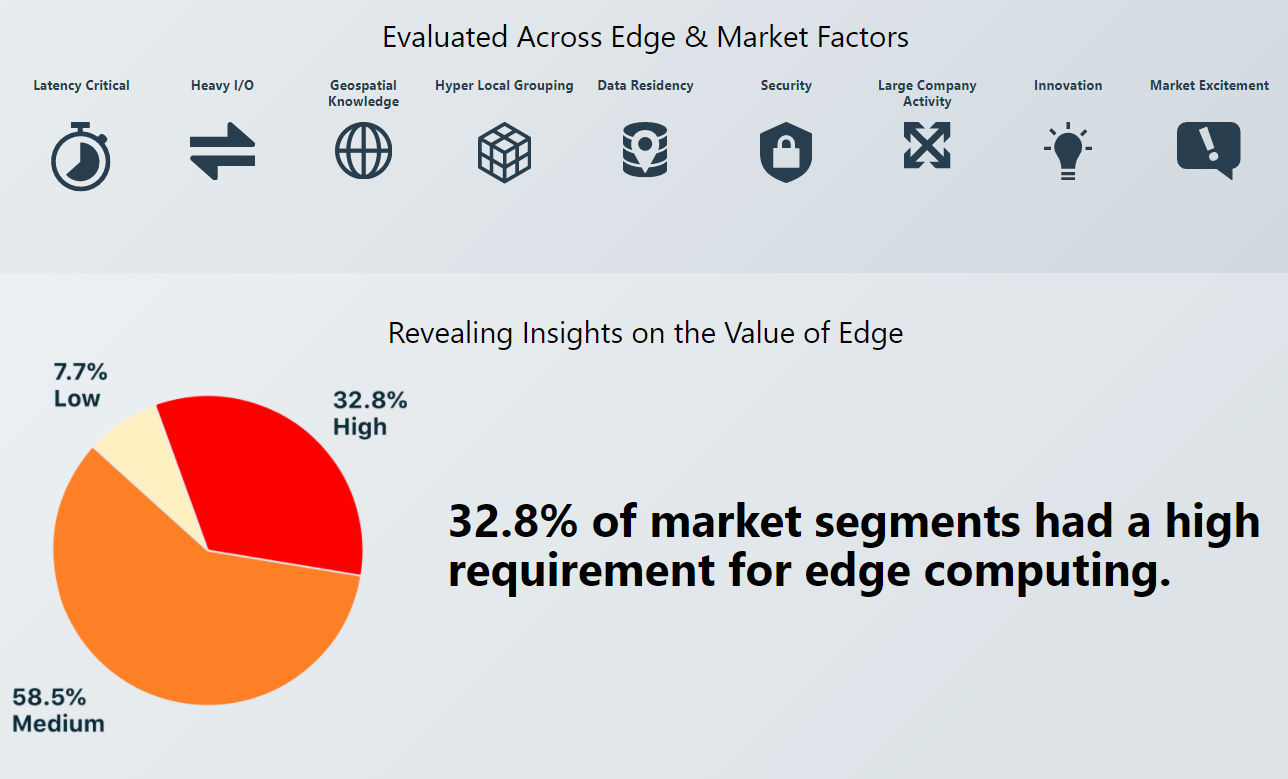

Edge computing is an emerging industry that saw a great deal of activity (new companies, fundraising, and M&A) in 2020. MobiledgeX and Topio Networks, partnering with Seamster, embarked upon an exhaustive, multi-month research initiative to understand which market segments will benefit the most from edge computing. To identify the product-market-technology fit, check out the Edge Market Navigator.

Building or joining industry ecosystems is a matter of life or death for AI chip companies: it is the final products that matter, not only the AI chip metrics. The overall performance of a final AI application depends on other components (like data) being built to work well with the AI chips. Big gains in performance will come only through an entire-systems approach. Data movement on and off chip is a bottleneck, and computation is just one component of a total systems solution.

“Investors today are looking at the semiconductor world…I think AI is pretty clearly going to be the next things that’s going to really scale up and drive the semi-conductor industry, so nothing attracts opportunity like AI at the moment.” — Brett Simpson, Partner & Co-Founder, Arete Research (from AI Hardware Summit hosted by Kisaco Research)

“Developing deep learning models is a bit like being a software developer 40 years ago. You have to worry about the hardware and the hardware is changing quite quickly… Being at the forefront of deep learning also involves being at the forefront of what hardware can do.” — Phil Blunsom, Department of Computer Science at Oxford University and DeepMind (from AI Hardware Summit hosted by Kisaco Research)

Note: To get a full landscape/list of AI chip companies, contact us for a free resource. (Contact[at]WiseOcean[dot]Tech)

by WiseOcean | Jun 14, 2020 | AI Chip

Investment in Artificial Intelligence (AI) chips has heated up over the past few years, including from the big software titans, after they found that computing hardware had become a bottleneck for the heavy lifting of Machine Learning. The reasons include:

- The traditional processor architecture is inefficient for data-heavy workload,

- The slowing of Moore’s Law (which drove computing performance for decades but is no longer the sole decisive factor),

- Different uses (for inference, training, or real-time continuous learning),

- Diversified and distributed AI computing (extreme low-power for IoT edge computing or super high throughput for complex Deep Learning algorithms),

- A coherent optimization for software and hardware full stack is necessary.

There are literally hundreds, if not thousands, of fabless AI chip startups, and dozens of programs in established semiconductor companies to create AI chips or embed AI technology in other parts of the product line. (source: Cadence blog)

An array of innovations in AI chip design has been developed, and some have already shipped. For example, memory efficiency, or in-memory processing capability, has become an essential feature for handling data-heavy AI workloads. New-generation architectures aim to reduce the delay in getting data to the processing point. Several strategies are available, from near-memory to processor-in-memory (PIM) designs.

A popular benchmark for comparing their performance is TOPS (tera operations per second), an ideal metric for pure performance. It has grown by orders of magnitude over the last couple of years. But TOPS is far from the whole story of real performance when those AI chips are implemented in real applications. Considering the explosion of data, the environmental concern raised by the huge energy consumption of AI computing, and the extremely low power budgets of edge AI, comparing performance per watt is more meaningful.
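As a small illustration of why the two metrics can rank chips differently, the sketch below compares two made-up accelerators by peak TOPS and by TOPS per watt. The chip names and numbers are hypothetical and are not taken from any vendor's datasheet.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    peak_tops: float    # peak tera operations per second
    power_watts: float  # power drawn at that peak

    @property
    def tops_per_watt(self) -> float:
        return self.peak_tops / self.power_watts


# Hypothetical example parts, for illustration only.
chips = [
    Chip("datacenter-accelerator", peak_tops=400.0, power_watts=300.0),
    Chip("edge-accelerator", peak_tops=4.0, power_watts=1.0),
]

fastest = max(chips, key=lambda c: c.peak_tops)
most_efficient = max(chips, key=lambda c: c.tops_per_watt)

if __name__ == "__main__":
    print("highest peak TOPS:", fastest.name)
    print("highest TOPS/W:  ", most_efficient.name)
```

With these assumed figures the data center part wins on raw TOPS while the edge part wins on efficiency, which is the distinction the paragraph above draws. Neither number says anything about throughput on a real model.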

There are many ways to improve performance per watt, and not just in hardware or software. Kunle Olukotun, Cadence Design Systems Professor of electrical engineering and computer science at Stanford University, said that relaxing precision, synchronization and cache coherence can reduce the amount of data that needs to be sent back and forth. That can be reduced even further by domain-specific languages, which do not require translation. (more discussion here)
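The effect of relaxed precision on data movement can be shown with simple arithmetic. The sketch below assumes a tensor of one million values and the standard storage sizes of three common number formats. The helper name `bytes_moved` is hypothetical, and the sketch ignores accuracy loss and any metadata such as scaling factors.

```python
# Storage size per value for common numeric formats.
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def bytes_moved(num_values: int, dtype: str) -> int:
    """Bytes needed to move a tensor of num_values elements once."""
    return num_values * BYTES_PER_VALUE[dtype]


if __name__ == "__main__":
    n = 1_000_000  # assumed tensor size, for illustration
    for dtype in BYTES_PER_VALUE:
        print(dtype, bytes_moved(n, dtype), "bytes")
    print("fp32 -> int8 reduction:", bytes_moved(n, "fp32") / bytes_moved(n, "int8"))
```

Moving from 32-bit floats to 8-bit integers cuts the traffic for the same tensor by a factor of four, which is one reason lower precision helps performance per watt when data movement dominates.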

An important consideration of AI and other high-performance devices is the fact that actual performance is not known until the end application is run. This raises questions for many AI processor startups that insist they can build a better hardware accelerator for matrix math and other AI algorithms than others.

All inference accelerators today are programmable because customers believe their model will evolve over time. This programmability will allow them to take advantage of enhancements in the future, something that would not be possible with hard-wired accelerators. However, customers want this programmability in a way where they can get the most throughput for a certain cost, and for a certain amount of power. This means they have to use the hardware very efficiently. The only way to do this is to design the software in parallel with the hardware to make sure they work together very well to achieve the maximum throughput.

One of the biggest problems today is that companies find themselves with an inference chip that has lots of MACs (multiplier–accumulator) and tons of memory, but actual throughput on real-world models is lower than expected because much of the hardware is idling. In almost every case, the problem is that the software work was done after the hardware was built. During the development phase, designers have to make many architectural tradeoffs and they can’t possibly do those tradeoffs without working with both the hardware and software — and this needs to be done early on. Chip designers then build a performance estimation model to determine how different amounts of memory, MACs, and DRAM would change relevant throughput and die size; and how the compute units need to coordinate for different kinds of models.
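A performance estimation model of the kind mentioned above can be approximated very roughly with a roofline-style bound: throughput is limited by whichever runs out first, compute or DRAM bandwidth. The sketch below is an assumption-laden simplification and not the method any particular chip designer uses. The function name `estimate_throughput` and all the figures are hypothetical.

```python
def estimate_throughput(
    peak_tops: float,            # peak compute, tera operations per second
    dram_gb_per_s: float,        # DRAM bandwidth, gigabytes per second
    ops_per_inference: float,    # operations one inference needs
    bytes_per_inference: float,  # DRAM traffic one inference needs
) -> tuple[float, float]:
    """Return (inferences per second, fraction of peak compute in use)."""
    compute_bound = peak_tops * 1e12 / ops_per_inference
    memory_bound = dram_gb_per_s * 1e9 / bytes_per_inference
    throughput = min(compute_bound, memory_bound)
    utilization = throughput / compute_bound
    return throughput, utilization


if __name__ == "__main__":
    # Hypothetical chip and model: plenty of MACs, modest DRAM bandwidth.
    throughput, utilization = estimate_throughput(
        peak_tops=100.0,
        dram_gb_per_s=50.0,
        ops_per_inference=10e9,
        bytes_per_inference=50e6,
    )
    print(f"{throughput:.0f} inferences/s at {utilization:.0%} compute utilization")
```

With these assumed numbers the chip is memory-bound and about 90% of its compute sits idle, which mirrors the "lots of MACs, low real throughput" situation described above. A real model would also account for on-chip memory, scheduling, and how compute units coordinate for each layer.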

Semiconductor companies and AI computing players need to coalesce their strategy around hardware innovations and software that reduces complexity and appeals widely to the developer ecosystem, which has proved crucial to the success of IP and chip vendors over decades of IC industry history.

To cultivate a user community and integrate well with the wider ecosystem, hardware players need not only to offer great interfaces and a software suite compatible with popular frameworks and libraries (such as TensorFlow and MXNet), but also to follow the evolution of models and application needs closely and respond accordingly. In addition, the development environment needs to support programming and balancing workloads across the different types of processors suited to different algorithms (heavily scalar, vector, matrix, etc.).

Note: If you need to learn about AI chip basics and the economics around them, a good read is AI Chips: What They Are and Why They Matter, a report published in April 2020 by the Center for Security and Emerging Technology.