Here is an introduction to the AI chip industry landscape and highlights with selected infographics and resources.

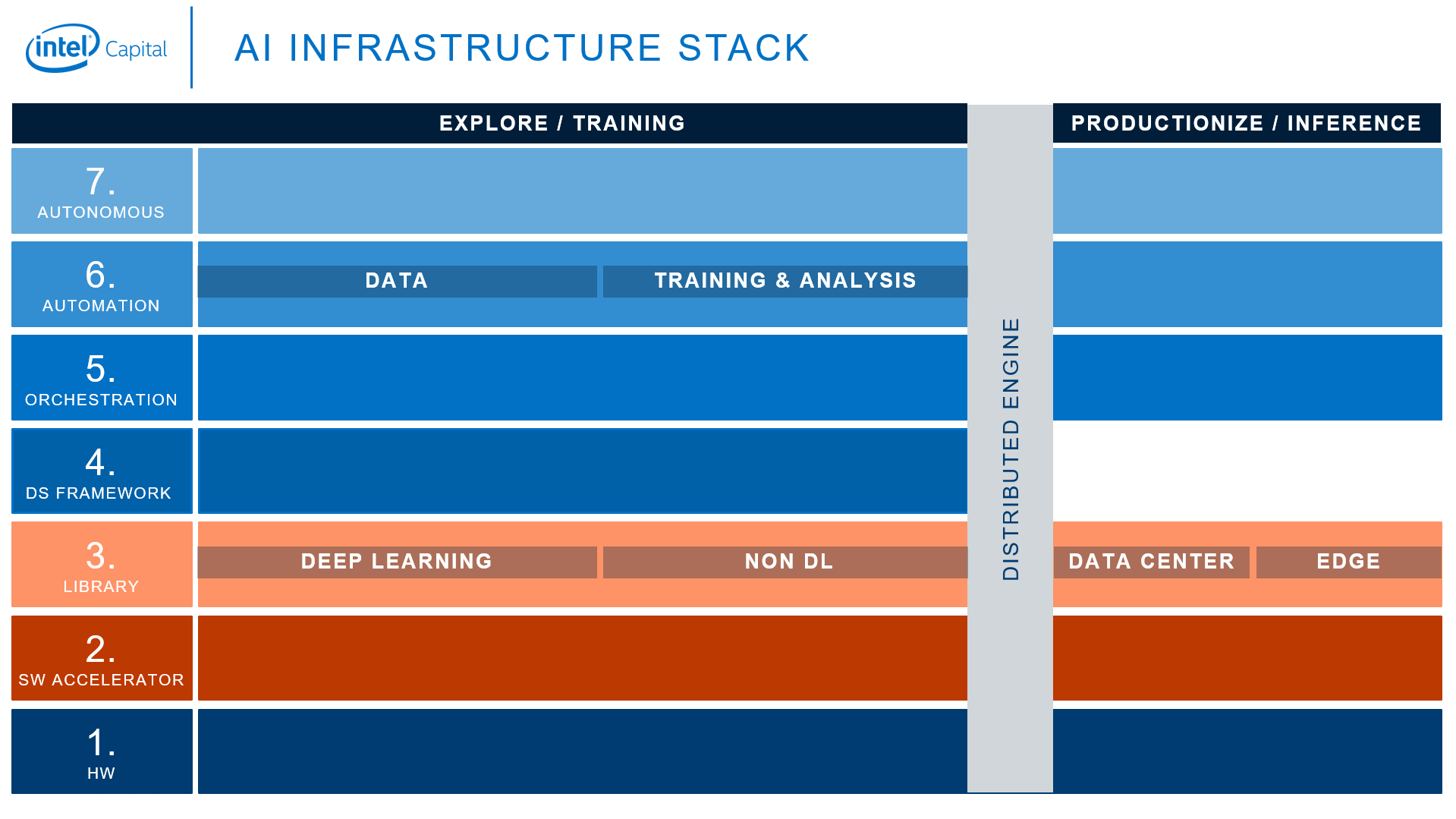

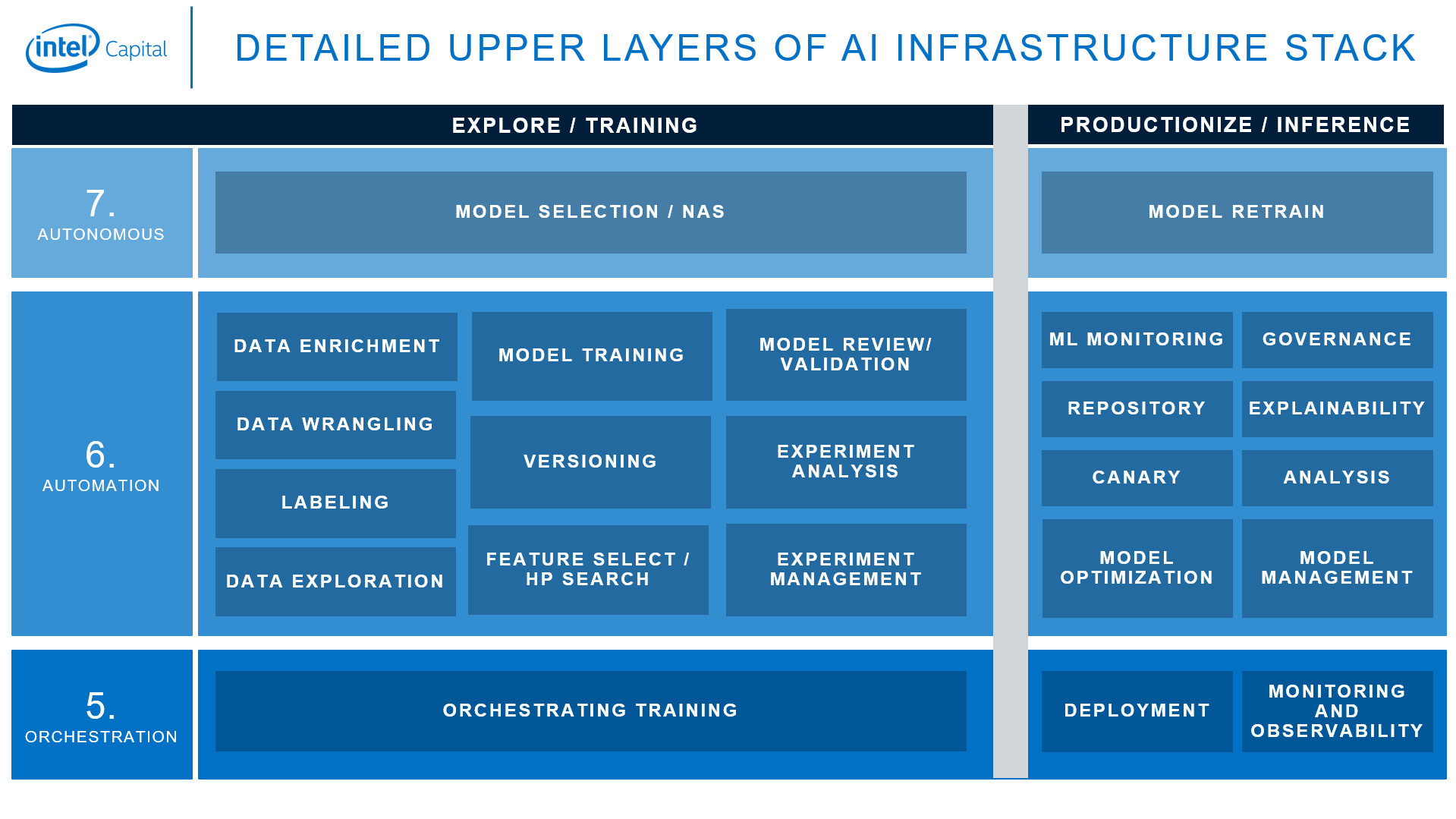

Full AI infrastructure stack from Intel Capital

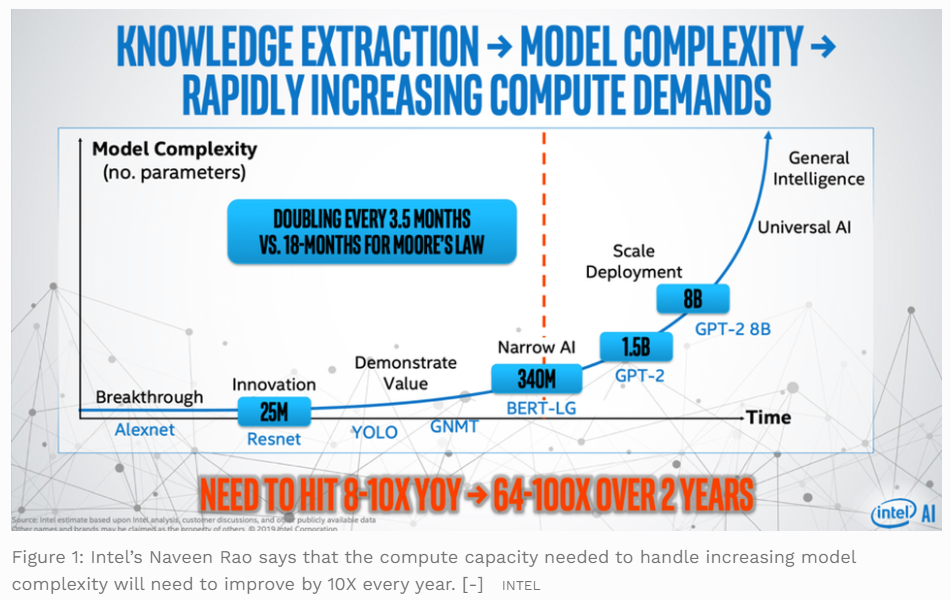

Intel’s Naveen Rao says that the compute capacity needed to handle ever-growing models will need to improve by 10x every year, and that achieving this 10x annual improvement will take 2x advances in architecture, silicon, interconnect, software, and packaging.
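One way to read that claim is as compounding arithmetic. The sketch below assumes that each of the five areas contributes an independent, multiplicative 2x gain. That is our assumption for illustration, not something the source states. Under it, the ceiling is 2^5 = 32x, which leaves headroom over the 10x target for gains that overlap or fall short.

```python
# Illustrative arithmetic only. The five areas come from the text above;
# treating their gains as independent and multiplicative is an assumption.
AREAS = ["architecture", "silicon", "interconnect", "software", "packaging"]


def combined_gain(per_area_gain: float, n_areas: int) -> float:
    """Overall speedup if every area delivers the same multiplicative gain."""
    return per_area_gain ** n_areas


def required_per_area_gain(target: float, n_areas: int) -> float:
    """Per-area gain needed to reach `target` overall, under the same assumption."""
    return target ** (1.0 / n_areas)


if __name__ == "__main__":
    print(combined_gain(2.0, len(AREAS)))                       # 32.0
    print(round(required_per_area_gain(10.0, len(AREAS)), 2))   # 1.58
```

Read the other way, a 10x overall gain would need only about 1.58x from each area if the five gains stacked perfectly.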

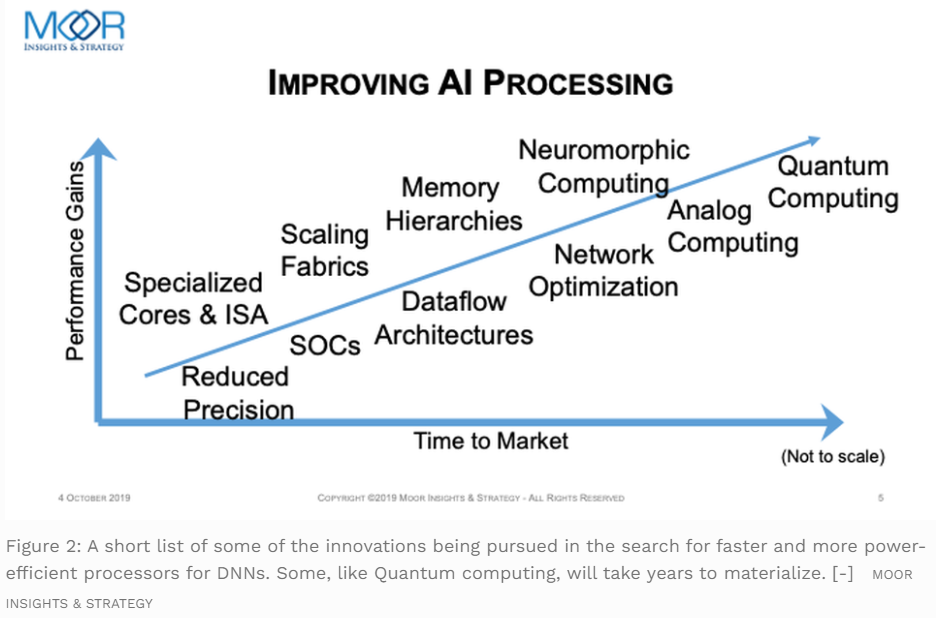

There are many innovations that improve performance. Moor Insights & Strategy created a chart to highlight them (beyond the use of lower precision, tensor cores, and arrays of MACs). Even so, AI hardware is harder than it looks.

IBM also has a technology roadmap for the future of AI chips.

But speed is not the only key metric. Chip design is always about trade-offs among power, performance, and area (PPA), optimized for specific applications.

The trade-off between performance (in GOP/sec) and power (image from IMEC and Beil8).

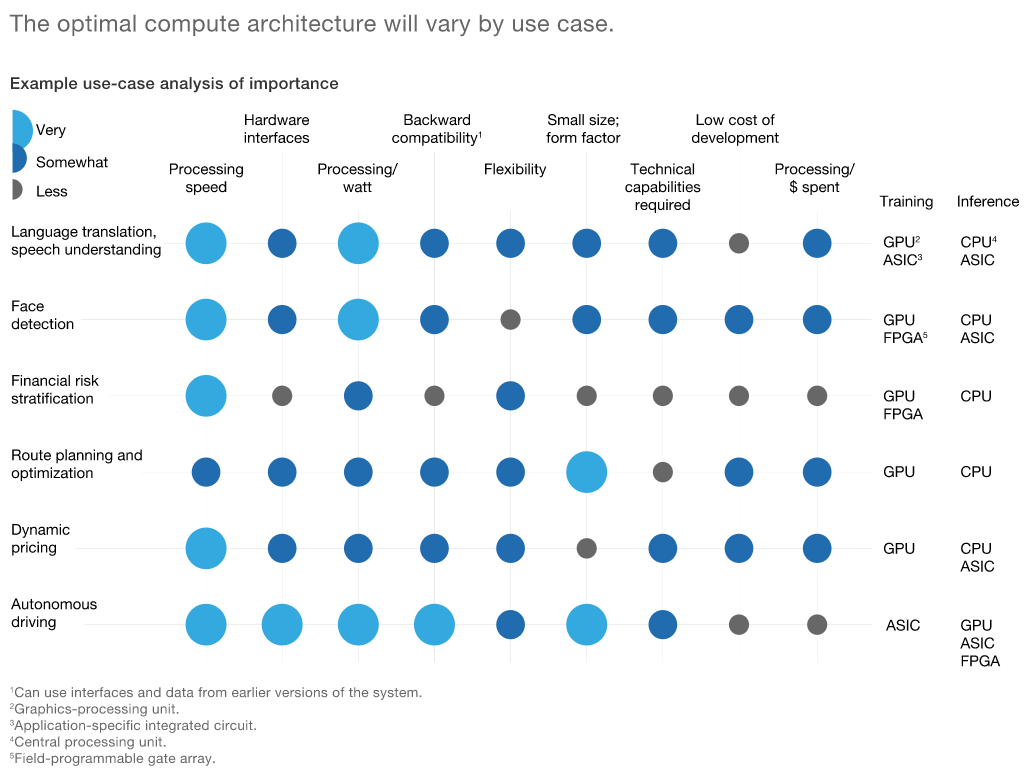
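To make the performance-versus-power trade-off concrete, here is a minimal sketch that compares chips by throughput per watt and picks the fastest one that fits a power budget. The chip names and all numbers are hypothetical placeholders, not measurements from the chart or from any vendor.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Chip:
    name: str
    gops: float   # throughput in GOP/sec
    watts: float  # power draw in W

    @property
    def gops_per_watt(self) -> float:
        """Energy efficiency: throughput delivered per watt."""
        return self.gops / self.watts


# Hypothetical example values, chosen only to illustrate the trade-off.
chips = [
    Chip("edge_asic", gops=4_000, watts=2),
    Chip("datacenter_gpu", gops=100_000, watts=300),
]


def best_within_power_budget(candidates: List[Chip], max_watts: float) -> Optional[Chip]:
    """Return the highest-throughput chip that fits the power budget, or None."""
    fitting = [c for c in candidates if c.watts <= max_watts]
    return max(fitting, key=lambda c: c.gops, default=None)
```

With these made-up numbers the data center part wins on raw throughput, while the edge part wins on efficiency and is the only option under a 5 W budget, which is the kind of application-specific choice the PPA framing describes.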

Feature requirements differ across use cases, and so do the choices of AI chip architecture. McKinsey gives some examples here.

McKinsey also concluded that AI ASIC chips will see the biggest growth of all.

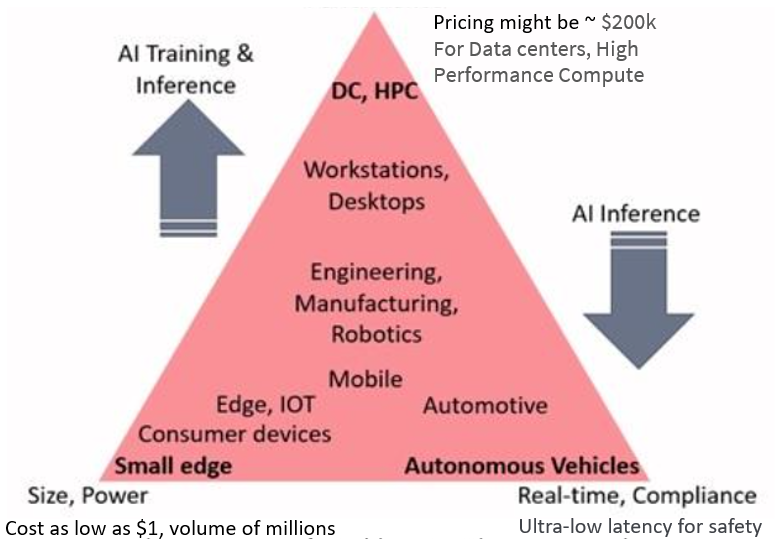

On the AI chip landscape, 80 startups globally have attracted more than $10 billion in funding, alongside 34 established players. Most startups focus on inference, avoiding direct competition with Nvidia. Kisaco Research created a conceptual triangle to represent the three major segments of AI accelerators.

The need for AI hardware accelerators has grown with the adoption of DL applications in real-time systems, where there is a need to accelerate DL computation to achieve low latency (less than 20ms) and ultra-low latency (1-10ms). DL applications in the small edge especially must meet a number of constraints: low latency and low power consumption, within the cost constraint of the small device. From a commercial viewpoint, the small edge is about selling millions of products, and the cost of the AI chip component may be as low as $1, whereas a high-end GPU AI accelerator ‘box’ for the data center may have a price tag of $200k. (From Michael Azoff, Kisaco Research.)
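The latency bands quoted above can be expressed as a small classifier. This is a sketch under stated assumptions: the function name and the "standard" label are ours, and because the quoted ranges overlap (1-10ms sits inside "less than 20ms"), anything at or below 10ms is treated here as ultra-low.

```python
def latency_class(latency_ms: float) -> str:
    """Map a measured latency to the bands quoted in the text.

    Assumptions (not from the source): values at or below 10 ms count as
    "ultra-low", and anything at 20 ms or above is labeled "standard".
    """
    if latency_ms < 0:
        raise ValueError("latency must be non-negative")
    if latency_ms <= 10:
        return "ultra-low"
    if latency_ms < 20:
        return "low"
    return "standard"
```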

IMEC identified the rise of the edge AI chip industry as one of five trends that will shape the future of the semiconductor technology landscape.

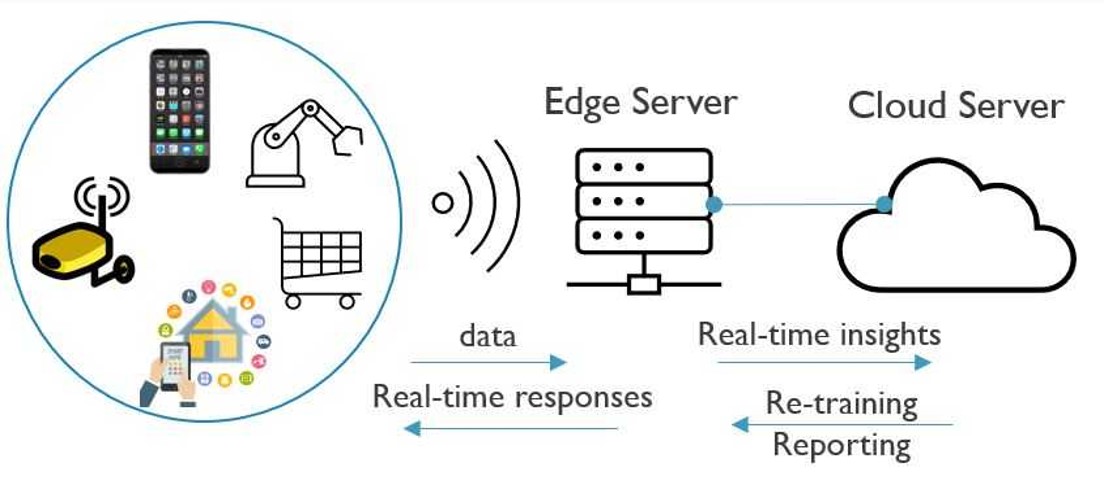

With expected growth of more than 100% in the next five years, edge AI is one of the biggest trends in the chip industry. As opposed to cloud-based AI, inference functions are embedded locally on the Internet of Things (IoT) endpoints that reside at the edge of the network, such as cell phones and smart speakers. The IoT devices communicate wirelessly with an edge server that is located relatively close. This server decides what data will be sent to the cloud server (typically, data needed for less time-sensitive tasks, such as re-training) and what data gets processed on the edge server.

Compared to cloud-based AI, in which data needs to move back and forth from the endpoints to the cloud server, edge AI addresses privacy concerns more easily. It also offers advantages of response speeds and reduced cloud server workloads. Just imagine an autonomous car that needs to make decisions based on AI. As decisions need to be made very quickly, the system cannot wait for data to travel to the server and back. Due to the power constraints typically imposed by battery-powered IoT devices, the inference engines in these IoT devices also need to be very energy efficient.
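The edge server's routing decision described above can be sketched as a simple rule: time-sensitive work stays at the edge, and everything else (such as re-training data) goes to the cloud. The `Task` type, its fields, and the example task names are hypothetical, and a real system would weigh far more than a single flag.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    time_sensitive: bool  # hypothetical flag set by the application


def route(task: Task) -> str:
    """Decide where a task's data is processed.

    Simplified rule taken from the text: time-sensitive tasks are handled
    on the edge server; less time-sensitive ones are sent to the cloud.
    """
    return "edge" if task.time_sensitive else "cloud"


if __name__ == "__main__":
    for task in (Task("obstacle_detection", True), Task("model_retraining", False)):
        print(f"{task.name} -> {route(task)}")
```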

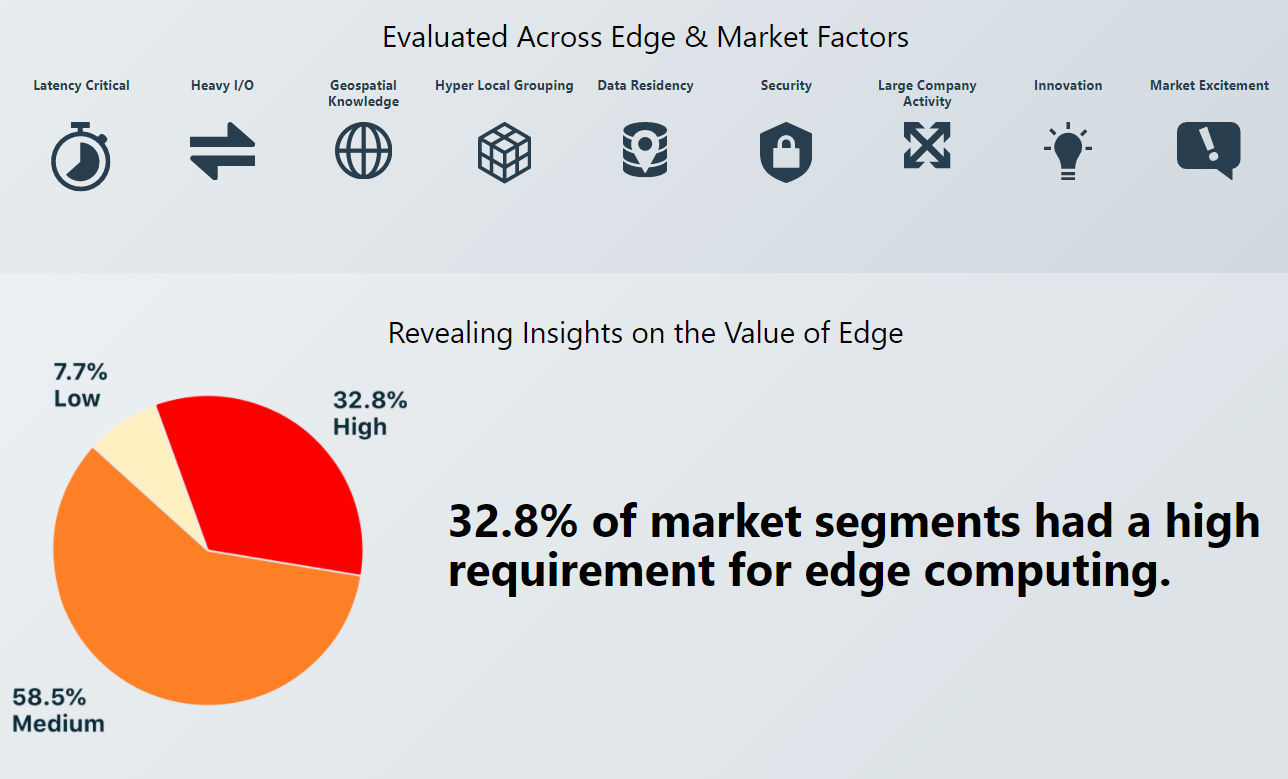

Edge computing is an emerging industry that saw a great deal of activity (new companies, fundraising, and M&A) in 2020. MobiledgeX and Topio Networks, partnering with Seamster, embarked on an exhaustive, multi-month research initiative to understand which market segments will benefit the most from edge computing. To identify the product-market-technology fit, check out the Edge Market Navigator.

Building or joining an industry ecosystem is life-or-death for AI chip companies: it’s the final products that matter, not only the AI chip metrics. The overall performance of a final AI application depends on other components (such as data) being built to work well with the AI chips. Big gains in performance will only come through an entire-systems approach. Data movement on and off chip is a bottleneck; computation is just one component of the total system.

“Investors today are looking at the semiconductor world…I think AI is pretty clearly going to be the next things that’s going to really scale up and drive the semi-conductor industry, so nothing attracts opportunity like AI at the moment.” — Brett Simpson, Partner & Co-Founder, Arete Research (from AI Hardware Summit hosted by Kisaco Research)

“Developing deep learning models is a bit like being a software developer 40 years ago. You have to worry about the hardware and the hardware is changing quite quickly… Being at the forefront of deep learning also involves being at the forefront of what hardware can do.” — Phil Blunsom, Department of Computer Science at Oxford University and DeepMind (from AI Hardware Summit hosted by Kisaco Research)

Note: To get a full landscape/list of AI chip companies, contact us for a free resource. (Contact[at]WiseOcean[dot]Tech)